In machine learning, models are only as good as the data they are fed. This is where feature engineering comes in: the practice of converting raw data into meaningful inputs that help algorithms learn patterns more efficiently.

In simplified terms, it’s about developing the appropriate “features” (or variables) so that your model can generate better predictions.

Feature engineering serves as a bridge between raw data and intelligent insights. Even the most advanced algorithms, such as deep neural networks and ensemble models, might suffer if their input data is not properly formatted.

Well-designed features highlight the most significant aspects of your dataset, enabling the model to identify trends, correlations, and nuances that might otherwise go unnoticed.

On the other hand, bad feature engineering might lead to major problems. Models may have low accuracy, fail to generalize well, or fall into the trap of overfitting, in which they perform spectacularly on training data but badly on fresh, unknown data. In short, without robust feature engineering, even powerful machine learning approaches might give poor or unreliable results.

In this article, I will discuss the basics of feature engineering, effective feature engineering techniques to follow, and some feature engineering best practices to help you build better models.

What Is Feature Engineering in Machine Learning?

Feature engineering is the process of transforming raw data into meaningful features that a machine learning model can learn from.

Put differently, it involves converting complex, messy data into a format that emphasizes the aspects that matter most for tasks like classification or prediction.

Feature engineering is situated in the middle of the machine learning pipeline, between data collection and model training.

After the data has been cleaned, it must be organized into a set of relevant variables, or “features,” that reflect the underlying patterns in the dataset.

The model makes decisions and learns associations based on these attributes. Even the most sophisticated algorithm might not be able to produce insightful results without this phase.

Let’s look at a few common examples. Suppose your dataset contains a “date of purchase” column. Rather than using the date in its raw form, you can split it into distinct elements like day, month, year, or day of the week, which could reveal behavioral or seasonal patterns.

Likewise, if your data contains text reviews, you may turn these into TF-IDF scores or word counts, transforming qualitative data into numerical values that models can understand.

It’s also important to understand how feature engineering relates to two commonly confused ideas: feature extraction and feature selection.

Feature extraction is the process of generating new features from preexisting data, frequently using tools like Principal Component Analysis (PCA) or embedding approaches that preserve meaning while compressing knowledge.

Feature selection, on the other hand, is about keeping only the most pertinent features from your collection in order to avoid noise and redundancy. In short, extraction creates new features, and selection decides which features (original or extracted) are worth keeping.
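The difference between the two ideas can be sketched on synthetic data. This is an illustrative example, not a recommended recipe: the dataset, the choice of three components, and the ANOVA F-score ranking are all assumptions made for the demonstration.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.decomposition import PCA
from sklearn.feature_selection import SelectKBest, f_classif

# Synthetic data (assumption): 200 rows, 10 features, only 3 informative.
X, y = make_classification(
    n_samples=200, n_features=10, n_informative=3,
    n_redundant=2, random_state=0,
)

# Feature extraction: build 3 NEW features, each a linear
# combination of all 10 original columns.
X_extracted = PCA(n_components=3).fit_transform(X)

# Feature selection: keep 3 of the ORIGINAL columns,
# ranked by their ANOVA F-score against the target.
selector = SelectKBest(score_func=f_classif, k=3)
X_selected = selector.fit_transform(X, y)
kept_columns = selector.get_support(indices=True)

print(X_extracted.shape, X_selected.shape)
print("Original columns kept:", kept_columns)
```

Both outputs have three columns, but the selected columns are unchanged copies of original features, while the extracted ones no longer correspond to any single original column.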

Effective feature engineering is built on these procedures, which together guarantee that the data your model sees is not only clean but also full of insight and predictive potential.

Why Feature Engineering Is Essential for Better Models

It is hard to overstate the importance of feature engineering, which is frequently the invisible element that distinguishes ordinary models from outstanding ones.

Algorithms provide the learning machinery, but the engineered features determine how much useful information the model can actually extract.

In a sea of noise, great features essentially function as powerful signals that direct the model toward precise predictions and a more profound understanding.

When you develop thoughtful features, you are aiding the model in comprehending the issue. For instance, supplying raw transaction data alone is insufficient for a credit scoring system.

But the algorithm gets a better picture of a person’s financial behavior if you engineer features like average monthly expenditure, payment delays, or credit usage ratio. This helps the model estimate credit risk more precisely.
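As a rough sketch of the credit scoring example, the snippet below derives the three features mentioned above from a tiny transaction log. The table layout, column names, and credit limits are hypothetical and exist only for illustration.

```python
import pandas as pd

# Hypothetical transaction log: one row per card payment.
transactions = pd.DataFrame({
    "customer_id": [1, 1, 1, 2, 2],
    "month": ["2024-01", "2024-01", "2024-02", "2024-01", "2024-02"],
    "amount": [120.0, 80.0, 100.0, 500.0, 700.0],
    "days_late": [0, 0, 5, 0, 0],
})
# Hypothetical credit limits, indexed by customer_id.
credit_limits = pd.Series({1: 1000.0, 2: 1500.0}, name="credit_limit")

# Total spend per customer per month, then the mean of those monthly totals.
monthly_spend = transactions.groupby(["customer_id", "month"])["amount"].sum()
avg_monthly_spend = monthly_spend.groupby(level="customer_id").mean()

# Number of payments that arrived late, per customer.
late_payments = (
    transactions["days_late"].gt(0).groupby(transactions["customer_id"]).sum()
)

features = pd.DataFrame({
    "avg_monthly_spend": avg_monthly_spend,
    "late_payments": late_payments,
})
# Credit usage ratio: average monthly spend relative to the credit limit.
features["credit_usage_ratio"] = features["avg_monthly_spend"] / credit_limits

print(features)
```

Each row of `features` now summarizes one customer, which is usually a more convenient unit for a credit risk model than individual transactions.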

Similarly, in recommendation systems, including features like item similarity, purchase frequency, and user engagement time can significantly improve model performance by tailoring recommendations more closely to each user.

Accuracy, interpretability, and generalization are three essential components of a strong machine learning model that are also influenced by feature engineering.

Well-crafted features improve accuracy by capturing the right patterns, generalization by helping the model perform well on unseen data, and interpretability by making model conclusions easier to understand.

Ineffective feature engineering can cause models to pick up on unimportant noise, which can result in subpar performance in the real world, even when training accuracy appears to be high.

In simple words, the quality of the features your algorithm learns from determines how well it performs, regardless of how sophisticated your algorithm is.

Better data outperforms better algorithms, as data scientists frequently assert. Intelligent, well-designed features are the real cornerstone of any successful machine learning endeavor.

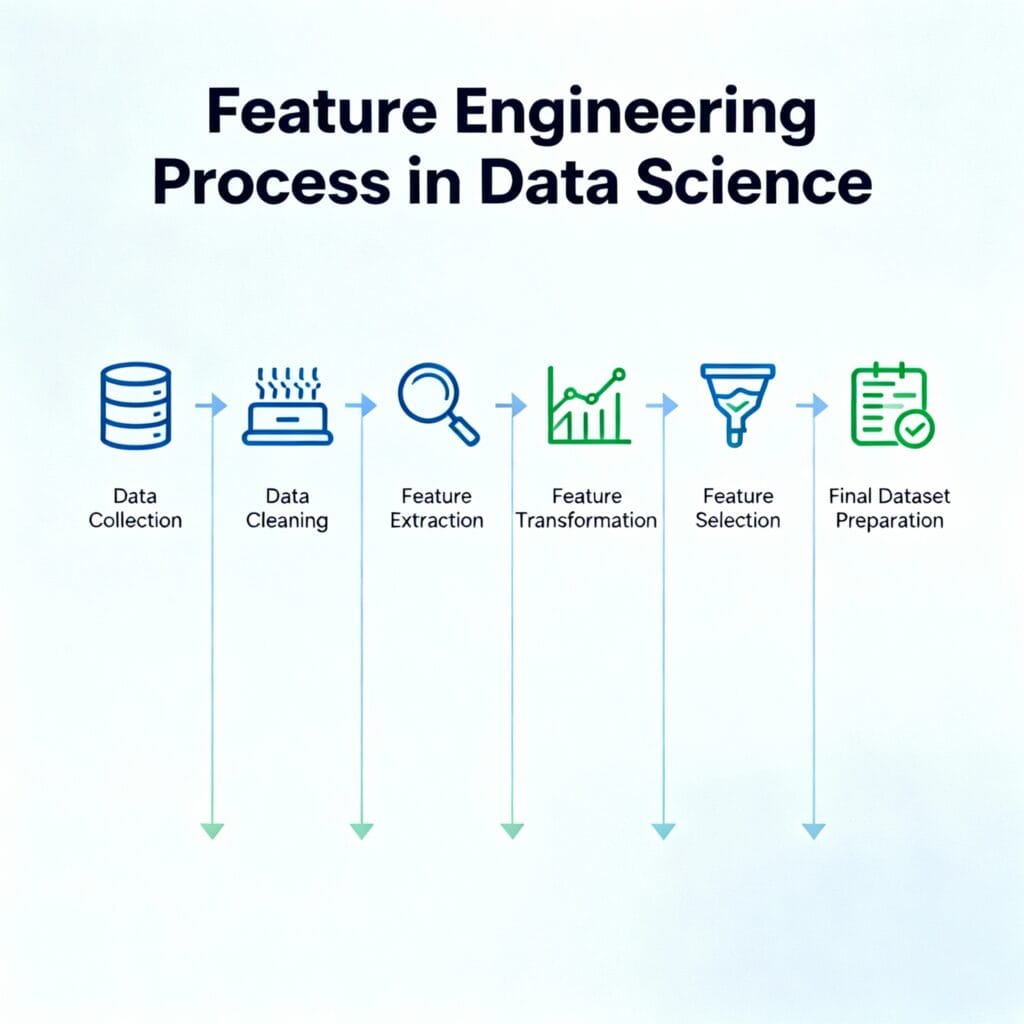

The Feature Engineering Workflow (Step-by-Step Process)

Feature engineering is a systematic, iterative process that transforms data from unreliable and raw to refined and model-ready.

Applying the appropriate feature engineering techniques at the appropriate moment is made easier when you comprehend each phase. To make the procedure more understandable, let’s dissect it step by step using examples.

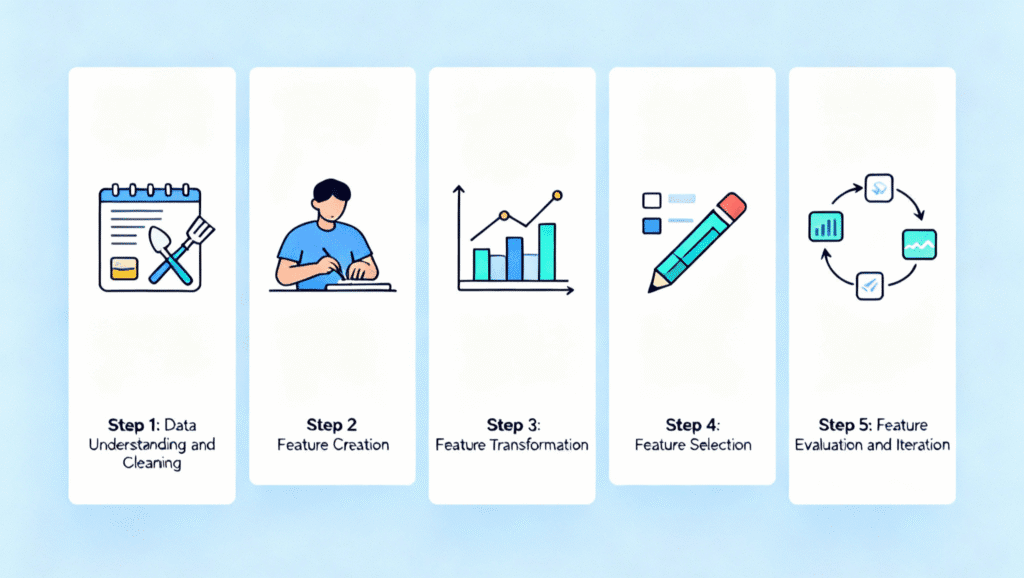

Step 1: Data Understanding and Cleaning

Great data is the foundation of any great model. Evaluate your dataset first, learning what each column means, looking for irregularities, and identifying values that are missing or incorrect.

It is frequently possible to identify noteworthy trends or outliers by visualizing distributions or summary statistics.

You must manage missing data carefully because it can significantly impact model accuracy.

Common techniques include removing incomplete rows, interpolating gaps in time series data, and mean or median imputation (filling gaps with the column’s mean or median).

For instance, a weather dataset’s missing temperature values can be filled in using the readings from the day before and the day after.
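A minimal pandas sketch of these two options follows. The weather table and its column names are made up for the example; which strategy is appropriate depends on the data.

```python
import numpy as np
import pandas as pd

# Hypothetical daily weather readings with gaps.
weather = pd.DataFrame(
    {
        "temperature": [20.0, np.nan, 24.0, 23.0, np.nan, 19.0],
        "humidity": [55.0, 60.0, np.nan, 58.0, 57.0, 100.0],
    },
    index=pd.date_range("2024-03-01", periods=6, freq="D"),
)

# Time series: linear interpolation fills each gap from the
# readings immediately before and after it.
weather["temperature_filled"] = weather["temperature"].interpolate(method="linear")

# Median imputation: less sensitive to extreme values than the mean.
weather["humidity_filled"] = weather["humidity"].fillna(weather["humidity"].median())

print(weather)
```

Keeping the filled values in new columns, as done here, makes it easy to compare them with the originals before deciding which version the model should see.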

Finding outliers is another crucial task. A negative age or an unusually high transaction value are examples of outliers that might skew model behavior.

Statistical techniques like Z-score thresholds and the interquartile range (IQR) can be used to detect such values so that they can be removed or corrected.
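Both rules can be sketched in a few lines of pandas. The sample values and the Z-score threshold of 2 are assumptions for illustration; a threshold of 3 is also common, and very small samples may never reach it.

```python
import pandas as pd

# Hypothetical transaction values with one suspicious entry.
amounts = pd.Series([12, 15, 14, 13, 16, 15, 14, 500], name="transaction_value")

# IQR rule: flag points more than 1.5 * IQR outside the middle 50% of the data.
q1, q3 = amounts.quantile(0.25), amounts.quantile(0.75)
iqr = q3 - q1
lower, upper = q1 - 1.5 * iqr, q3 + 1.5 * iqr
iqr_outliers = (amounts < lower) | (amounts > upper)

# Z-score rule: flag points far from the mean, measured in standard deviations.
z_scores = (amounts - amounts.mean()) / amounts.std()
z_outliers = z_scores.abs() > 2  # threshold is a judgment call

# One way to correct rather than drop: clip values to the IQR fences.
clipped = amounts.clip(lower=lower, upper=upper)

print("IQR outliers:", amounts[iqr_outliers].tolist())
print("Z-score outliers:", amounts[z_outliers].tolist())
```

Whether to drop, clip, or keep a flagged value depends on whether it is a data error or a genuine but rare observation.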

Step 2: Feature Creation

The next objective is to develop new features that more accurately depict underlying patterns after the data has been cleaned. Domain knowledge, or knowing the background of your data, is frequently necessary for this step.

For instance, you could calculate a purchase frequency ratio (total purchases divided by total visits) in an e-commerce dataset to show how engaged users are.

Financial features such as the debt-to-income ratio or monthly average balance can indicate creditworthiness more effectively than raw figures.

Other feature engineering techniques include log transformations, which deal with skewed data, and interaction terms, which multiply or otherwise combine features to expose relationships between them. The objective is to help the model find patterns that are not explicit in the raw data.
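The sketch below shows how a ratio, a log transform, and an interaction term might be built with pandas and NumPy. All column names and values are hypothetical.

```python
import numpy as np
import pandas as pd

# Hypothetical per-user summary table.
users = pd.DataFrame({
    "total_purchases": [2, 10, 0],
    "total_visits": [20, 25, 0],
    "annual_income": [30_000.0, 120_000.0, 45_000.0],
    "total_debt": [6_000.0, 60_000.0, 0.0],
})

# Ratio feature. Zero visits are turned into NaN first so the division
# cannot produce inf; users with no visits get a frequency of 0.
visits = users["total_visits"].replace(0, np.nan)
users["purchase_frequency"] = (users["total_purchases"] / visits).fillna(0.0)

# Debt-to-income ratio.
users["debt_to_income"] = users["total_debt"] / users["annual_income"]

# Log transform compresses the long right tail of income;
# log1p computes log(1 + x), so zeros are handled safely.
users["log_income"] = np.log1p(users["annual_income"])

# Interaction term: combines two features into one signal.
users["engagement_x_income"] = users["purchase_frequency"] * users["log_income"]

print(users)
```

Whether a given ratio or interaction is useful is an empirical question, so engineered columns like these are best treated as candidates to be validated rather than guaranteed improvements.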

Step 3: Feature Transformation

Feature transformation puts features into a form the model can use and helps keep any single feature from dominating simply because of its scale. This is the point at which encoding and feature scaling become relevant.

Models such as Logistic Regression or K-Means typically perform better when numerical data is normalized (scaled between 0 and 1) or standardized (centered around mean 0 with standard deviation 1).

Without scaling, features with large values can dominate those with small values and bias training.
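A short scikit-learn sketch of the two scaling options, using a made-up array of ages and incomes:

```python
import numpy as np
from sklearn.preprocessing import MinMaxScaler, StandardScaler

# Two features on very different scales (hypothetical): age in years, income.
X = np.array([[25, 30_000], [35, 60_000], [45, 90_000]], dtype=float)

# Normalization: each column is rescaled to the range [0, 1].
X_minmax = MinMaxScaler().fit_transform(X)

# Standardization: each column gets mean 0 and standard deviation 1.
X_standard = StandardScaler().fit_transform(X)

print(X_minmax)
print(X_standard)
```

After either transformation, age and income vary over comparable ranges, so distance computations and gradient updates are no longer driven mainly by income.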

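Encoding is necessary for categorical data so that models can interpret it numerically. Label Encoding assigns each category an integer, while One-Hot Encoding generates a binary column for every category. One-Hot Encoding avoids implying an order between categories, which makes it a common choice for linear and distance-based models; tree-based models can often work with integer codes directly.

Target Encoding replaces each category with the average value of the target variable for that category. It captures the relationship between category and target without inflating dimensionality, but the averages must be computed from training data only to avoid leakage.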
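Target encoding can be sketched by hand with pandas. This is a deliberately naive version under stated assumptions: the `city` and `churned` columns are hypothetical, and there is no smoothing or out-of-fold computation, both of which are commonly added in practice to limit overfitting on rare categories.

```python
import pandas as pd

# Hypothetical training and test sets.
train = pd.DataFrame({
    "city": ["A", "A", "B", "B", "B", "C"],
    "churned": [1, 0, 1, 1, 0, 0],
})
test = pd.DataFrame({"city": ["A", "C", "D"]})  # "D" never appears in training

# Learn the category -> mean target mapping from the training rows only.
global_mean = train["churned"].mean()
city_means = train.groupby("city")["churned"].mean()

train["city_encoded"] = train["city"].map(city_means)

# Categories unseen during training fall back to the global mean.
test["city_encoded"] = test["city"].map(city_means).fillna(global_mean)

print(test)
```

The single `city_encoded` column replaces what would otherwise be one binary column per city under one-hot encoding.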

Step 4: Feature Selection

Performance isn’t always improved by having more features; some introduce redundancy or noise. Feature selection methods help you retain only the most informative variables.

Feature selection strategies are often divided into three groups.

Filter methods (such as correlation, chi-square, and mutual information) rank variables according to their statistical relevance.

Wrapper methods, such as Recursive Feature Elimination (RFE), assess subsets of features by repeatedly training models to discover the best combination.

Embedded methods, such as Lasso Regression or tree-based models, select features during model training itself, for example by penalizing the coefficients of irrelevant ones toward zero.

These strategies streamline the model, reduce overfitting, and improve prediction speed and interpretability.
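The three families can be compared side by side on synthetic data. The dataset, the number of features to keep, and the Lasso penalty `alpha=1.0` are assumptions chosen for the demonstration; the three methods will not always agree on which features to keep.

```python
import numpy as np
from sklearn.datasets import make_regression
from sklearn.feature_selection import RFE, SelectKBest, f_regression
from sklearn.linear_model import Lasso, LinearRegression

# Synthetic regression data (assumption): 8 features, 3 informative.
X, y = make_regression(
    n_samples=300, n_features=8, n_informative=3, noise=5.0, random_state=0
)

# Filter: rank features by a univariate statistic (correlation-based F-test).
filter_sel = SelectKBest(score_func=f_regression, k=3).fit(X, y)
filter_kept = filter_sel.get_support(indices=True)

# Wrapper: repeatedly fit a model and drop the weakest feature.
wrapper_sel = RFE(LinearRegression(), n_features_to_select=3).fit(X, y)
wrapper_kept = np.flatnonzero(wrapper_sel.support_)

# Embedded: the L1 penalty drives some coefficients to exactly zero.
lasso = Lasso(alpha=1.0).fit(X, y)
embedded_kept = np.flatnonzero(lasso.coef_)

print("Filter:  ", filter_kept)
print("Wrapper: ", wrapper_kept)
print("Embedded:", embedded_kept)
```

Filter methods are the cheapest, wrapper methods the most expensive because they retrain the model many times, and embedded methods sit in between since selection happens as a side effect of a single fit.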

Step 5: Feature Evaluation and Iteration

Feature engineering does not end with the creation and selection of features; it is a continuous review and refining cycle.

Models such as Random Forest, XGBoost, and LightGBM can be used to assess feature relevance by ranking variables based on their contribution to predictions.

Iterate after discovering the top-performing features: delete the weak ones, experiment with different transformations, and test again. This technique improves the accuracy and generalizability of your model over time.
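A minimal sketch of importance-based review with a Random Forest follows. The synthetic dataset, the feature names, and the 0.05 cutoff for "weak" features are illustrative assumptions, not fixed rules.

```python
import pandas as pd
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier

# Synthetic data (assumption). With shuffle=False the informative
# columns come first, so feature_0 and feature_1 carry the signal.
X, y = make_classification(
    n_samples=500, n_features=6, n_informative=2, n_redundant=0,
    shuffle=False, random_state=0,
)
feature_names = [f"feature_{i}" for i in range(6)]

model = RandomForestClassifier(n_estimators=100, random_state=0).fit(X, y)

# Impurity-based importances sum to 1 across all features.
importances = pd.Series(
    model.feature_importances_, index=feature_names
).sort_values(ascending=False)

# Candidates to drop in the next iteration (the cutoff is a judgment call).
weak = importances[importances < 0.05].index.tolist()

print(importances)
print("Candidates to drop:", weak)
```

Impurity-based importances can be biased toward high-cardinality features, so it is worth confirming any removal by re-evaluating the model afterwards.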

In practice, the best outcomes come from experimentation, which combines creativity, statistical thinking, and domain understanding to continuously improve your feature set until the model works optimally.

Common Feature Engineering Techniques (With Examples)

Once you have learned the procedure, the next step is to learn the most useful feature transformation techniques.

These are the techniques for transforming data into relevant, model-friendly representations, and they are employed in almost every real-world machine learning effort.

The following are some of the most effective and popular feature engineering examples in Python and data science practice.

1. Binning (Discretization)

Binning makes models more resilient to small noise and fluctuations by converting continuous numerical input into discrete intervals.

You can make bins like 18–25, 26–40, and 41–60, for example, rather than utilizing raw ages (22, 34, 57). This is particularly helpful when you wish to simplify relationships or when algorithms are sensitive to outliers.

In Python, you can use pd.cut() or pd.qcut() in pandas for this transformation. For example:

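# include_lowest=True keeps age 18 in the first bin; ages outside 18-60 become NaN
df['age_group'] = pd.cut(df['age'], bins=[18, 25, 40, 60], labels=['Young', 'Adult', 'Senior'], include_lowest=True)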

In addition to making data easier to understand, binning can reveal hidden trends, such as a sharp decline in sales for particular age groups.

2. Log and Power Transformations

Transformations can pull skewed data, in which a small number of extreme values dominate the distribution, closer to a normal shape. This often helps algorithms such as linear regression or SVMs, which tend to behave better when features are roughly symmetric and on comparable scales.

When transforming income or sales data, for example, the log transformation lessens the impact of extreme values, whereas power transformations such as Box-Cox (strictly positive data only) or Yeo-Johnson (which also accepts zero and negative values) stabilize variance across ranges.

df['sales_log'] = np.log1p(df['sales'])

By making the data more consistent and simpler for the model to understand, these changes increase the accuracy and stability of training.
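For the power transformations, scikit-learn provides `PowerTransformer`. The sketch below applies Yeo-Johnson to synthetic, log-normally distributed "sales" values (an assumption for the demo) and compares skewness before and after.

```python
import numpy as np
from scipy.stats import skew
from sklearn.preprocessing import PowerTransformer

# Synthetic right-skewed data (assumption): log-normal "sales" values.
rng = np.random.default_rng(0)
sales = rng.lognormal(mean=3.0, sigma=1.0, size=1000).reshape(-1, 1)

# Yeo-Johnson accepts zero and negative values;
# method="box-cox" would require strictly positive data.
pt = PowerTransformer(method="yeo-johnson", standardize=True)
sales_transformed = pt.fit_transform(sales)

skew_before = skew(sales.ravel())
skew_after = skew(sales_transformed.ravel())

print(f"Skewness before: {skew_before:.2f}, after: {skew_after:.2f}")
print("Fitted lambda:", pt.lambdas_)
```

The transformer estimates its exponent from the data, so, like a scaler, it should be fit on training data and then reused on validation and test data.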

3. Encoding Categorical Data

Many machine learning algorithms require numerical input, so categorical variables must be encoded before they can be used.

Label encoding gives each category a distinct integer. It is straightforward but may introduce ordinal bias, because the model can read the integers as an order that does not exist.

One-Hot Encoding generates a separate binary column for every category. It avoids implying a false order and works well for linear models and low-cardinality features, though it inflates dimensionality when there are many categories.

In datasets with a large number of categories, Target Encoding replaces each category with the average target value for that category, capturing the link between category and target in a single column.

Example in Python using pandas and scikit-learn:

from sklearn.preprocessing import OneHotEncoder

encoder = OneHotEncoder()

# Returns a SciPy sparse matrix by default; call .toarray() for a dense array
encoded = encoder.fit_transform(df[['category']])

Each technique has a distinct function: Label Encoding is used for ordinal attributes like educational levels, Target Encoding is used for large category sets, and One-Hot is used for small ones.
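For ordinal attributes, the order of the integer codes matters. A plain label encoder assigns codes alphabetically, which would place "Bachelor" below "High School". One way to control the order, sketched here with a hypothetical `education` column, is scikit-learn's `OrdinalEncoder` with an explicit category list.

```python
import pandas as pd
from sklearn.preprocessing import OrdinalEncoder

# Hypothetical ordinal column.
df = pd.DataFrame(
    {"education": ["Bachelor", "High School", "Master", "High School"]}
)

# Explicit order, lowest to highest (one inner list per encoded column).
order = [["High School", "Bachelor", "Master"]]

encoder = OrdinalEncoder(categories=order)
df["education_level"] = encoder.fit_transform(df[["education"]]).ravel()

print(df)
```

With the order supplied, the codes increase with the level of education, so a model can treat larger values as "more education".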

4. Date and Time Features

Although they rarely directly assist models, raw timestamps can provide valuable insights when broken down. Patterns in behavior or time can be found by extracting year, month, weekday, hour, or even seasonality.

For example, in sales data, purchases might spike during weekends or festive seasons. You can extract such patterns easily in Python.

df['purchase_date'] = pd.to_datetime(df['purchase_date'])

df['weekday'] = df['purchase_date'].dt.day_name()

df['hour'] = df['purchase_date'].dt.hour

These time-based features often significantly enhance forecasting and demand prediction models.

5. Text Features

Although unstructured text data is extremely valuable, it must be transformed into numerical form. The following are two widely used feature transformation methods for text.

Term Frequency–Inverse Document Frequency, or TF-IDF, scores how important a word is to a document relative to how common it is across the whole collection of documents.

Embeddings, such as Word2Vec, GloVe, or BERT, go beyond mere frequency counts to capture semantic meaning and relationships between words.

For example, using scikit-learn’s TF-IDF vectorizer:

from sklearn.feature_extraction.text import TfidfVectorizer

vectorizer = TfidfVectorizer(max_features=1000)

X_text = vectorizer.fit_transform(df['review_text'])

This gives models numerical signals from which they can learn sentiment, topics, and intent, which is crucial for NLP applications.

6. Dimensionality Reduction

Larger datasets frequently contain redundant or correlated features that slow models down. Dimensionality reduction techniques simplify the dataset while retaining most of the important information.

By projecting the variables onto a smaller set of uncorrelated components, Principal Component Analysis (PCA) lowers the number of variables.

High-dimensional data visualization in 2D or 3D space is made possible by t-SNE (t-Distributed Stochastic Neighbor Embedding), which is frequently used for exploratory data analysis.

from sklearn.decomposition import PCA

pca = PCA(n_components=2)

# X_scaled: numeric feature matrix that has already been standardized
X_reduced = pca.fit_transform(X_scaled)

These techniques can speed up training, reduce overfitting, and make structure in the data easier to visualize, although the resulting components are usually harder to interpret than the original features.
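Because PCA is sensitive to feature scale, it is usually run on standardized data. The following self-contained sketch chains the two steps and reports how much variance the kept components retain; the Iris dataset is used only as a convenient built-in example.

```python
from sklearn.datasets import load_iris
from sklearn.decomposition import PCA
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler

X = load_iris().data  # 150 rows, 4 numeric features

# Standardize first, then project onto 2 principal components.
pipeline = make_pipeline(StandardScaler(), PCA(n_components=2))
X_reduced = pipeline.fit_transform(X)

# Share of the total variance captured by each kept component.
explained = pipeline.named_steps["pca"].explained_variance_ratio_

print(X_reduced.shape)
print("Explained variance ratio:", explained, "total:", explained.sum())
```

Checking the explained variance ratio is a simple way to judge whether the chosen number of components discards too much information.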

All data scientists should become proficient in these feature engineering techniques. Thoughtful engineering turns raw data into refined inputs by handling skewed distributions, encoding categorical variables, and producing rich time-based or textual features.

This is the groundwork from which genuinely accurate and interpretable machine learning models are built.

Automated Feature Engineering Tools

Making features by hand for each dataset can become laborious and prone to mistakes as machine learning projects get more sophisticated.

Automated feature engineering tools are software libraries that automatically create, select, and evaluate new features, saving time and effort while preserving quality and consistency.

In order to identify connections, interactions, and changes that human analysts might otherwise overlook, these tools employ algorithms and heuristics.

The most widely used tools and their applications in real data science workflows are discussed below.

1. Featuretools

For Python feature engineering, Featuretools is one of the most well-known open-source packages.

The technique it employs, known as Deep Feature Synthesis (DFS), aggregates and stacks relationships from several tables to automatically generate new features.

For instance, it can produce features like the average transaction value per customer or the number of purchases placed in the last 30 days without requiring you to write intricate code by hand.

It is ideal for large-scale automation and relational datasets.

A drawback is that it can produce a lot of features, which might necessitate further feature selection or filtering to prevent overfitting.

2. AutoFeat

AutoFeat focuses on automatically creating and selecting mathematical transformations of existing features.

It’s particularly useful for numerical datasets, where transformations such as logarithmic, polynomial, or interaction terms might increase model accuracy.

You can integrate it easily with scikit-learn workflows:

from autofeat import AutoFeatRegressor

model = AutoFeatRegressor()

X_new = model.fit_transform(X, y)

It is beneficial because it performs complex feature transformations with very little code.

Limitation: It performs best on small to medium-sized datasets; on really large ones, it can become computationally costly.

3. TSFresh

TSFresh (Time Series Feature extraction based on scalable hypothesis testing) is a potent option if you are working with time series data.

It automatically calculates hundreds of time series features, including mean, standard deviation, autocorrelation, and entropy, and filters them by statistical significance.

Why it’s beneficial: Perfect for sensor-based analytics, predictive maintenance, and finance applications where time dependency is important.

Limitation: It generates a large number of features, so dimensionality reduction or feature selection is frequently required afterwards.

4. PyCaret

PyCaret is a low-code machine learning package that builds automated feature engineering into its end-to-end workflow.

It takes care of feature encoding, scaling, transformation, and even feature selection in the background so that users can concentrate on experimenting with the model rather than preprocessing.

It is excellent for rapid model comparison and prototyping.

Limitation: Less control over particular feature engineering logic; unsuitable for projects requiring domain-specific transformations or extensive customisation.

Best Practices and Expert Tips for Effective Feature Engineering

┌───────────────────────┐
│ Raw Data              │
│ (Unstructured or      │
│ messy inputs)         │
└───────────┬───────────┘
            │
            ▼
┌───────────────────────┐
│ Data Cleaning &       │
│ Preparation           │
│ - Handle missing      │
│   values & outliers   │
│ - Fix inconsistencies │
└───────────┬───────────┘
            │
            ▼
┌───────────────────────┐
│ Feature Engineering   │
│ - Create new features │
│ - Transform & scale   │
│ - Encode categories   │
└───────────┬───────────┘
            │
            ▼
┌───────────────────────┐
│ Machine Learning      │
│ Model Training        │
│ - Algorithms learn    │
│   from features       │
└───────────┬───────────┘
            │
            ▼
┌───────────────────────┐
│ Predictions /         │
│ Insights              │
│ - Model outputs       │
│   useful results      │
└───────────────────────┘

Feature Engineering Workflow

Although feature engineering requires experimentation and creativity, following a few established rules helps your efforts produce dependable, consistent improvements.

The expert-based feature engineering best practices listed below will assist you in creating data pipelines that improve interpretability and performance.

1. Start with Domain Understanding

Take the time to understand the context of your data before writing any code. Once you understand how variables relate to the real-world problem, you can create features that carry real meaning.

For instance, a combination of blood pressure and cholesterol levels may indicate cardiovascular risk in the healthcare industry; in e-commerce, the interval between repeat transactions can represent consumer loyalty.

When features are generated without domain expertise, models run the risk of learning spurious correlations.

2. Keep Features Interpretable and Relevant

Complex features do not always bring performance gains. Always strive for interpretability, meaning features that people can understand and explain. Interpretable features enable teams to make business decisions with confidence and to trust model outputs.

A feature should be excluded if it doesn’t clarify the data or if it has little relationship to the target variable. Remember that a small number of strong, relevant features frequently performs better than a large number of arbitrary ones.

3. Avoid Data Leakage

Preventing data leakage, the unintentional influence of test set information on the training process, is one of the most important feature engineering strategies.

Leakage produces overly optimistic performance estimates that do not hold up in production. To prevent it, scaling, encoding, and imputation transformations should be fit on the training data only and then applied, unchanged, to the validation and test sets.

Always double-check time-based datasets because training features may contain information from the future.
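The fit-on-train, apply-to-test pattern can be sketched with a scaler. The random data below is an assumption for the demo; the key point is that `fit_transform` is called on the training split and only `transform` on the test split.

```python
import numpy as np
from sklearn.model_selection import train_test_split
from sklearn.preprocessing import StandardScaler

# Synthetic data (assumption): 200 rows, 3 numeric features, binary target.
rng = np.random.default_rng(42)
X = rng.normal(loc=50, scale=10, size=(200, 3))
y = rng.integers(0, 2, size=200)

# Split BEFORE computing any statistics.
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, random_state=0
)

scaler = StandardScaler()
X_train_scaled = scaler.fit_transform(X_train)  # statistics from training rows only
X_test_scaled = scaler.transform(X_test)        # same statistics reused, no refit

print("Means learned from training data:", scaler.mean_)
```

Calling `fit_transform` on the full dataset before splitting would let test-set statistics influence the training features, which is exactly the leak described above.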

4. Regularly Validate Model Improvements

Feature engineering needs to be a continuous process rather than a one-time event. Verify whether the features you add or change actually make the model better.

Assess the effects of changes using methods such as feature importance analysis, cross-validation, and performance tracking (e.g., accuracy, RMSE, or F1-score).

In the event that a feature causes instability or fails to enhance performance, it is advisable to remove it and proceed.
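One way to check whether a new feature earns its place is to compare cross-validated error with and without it. The sketch below uses synthetic data in which the target depends on a product of two inputs (an assumption made so that the interaction feature is known to help).

```python
import numpy as np
from sklearn.linear_model import LinearRegression
from sklearn.model_selection import cross_val_score

# Synthetic data (assumption): target depends on width * height plus noise.
rng = np.random.default_rng(0)
width = rng.uniform(1, 10, size=300)
height = rng.uniform(1, 10, size=300)
price = 3.0 * width * height + rng.normal(0, 5, size=300)

X_base = np.column_stack([width, height])
X_new = np.column_stack([width, height, width * height])  # adds interaction feature


def cv_rmse(X, y):
    """Mean RMSE over 5 cross-validation folds (lower is better)."""
    scores = cross_val_score(
        LinearRegression(), X, y, cv=5, scoring="neg_root_mean_squared_error"
    )
    return -scores.mean()


rmse_base = cv_rmse(X_base, price)
rmse_new = cv_rmse(X_new, price)

print(f"RMSE without interaction: {rmse_base:.2f}")
print(f"RMSE with interaction:    {rmse_new:.2f}")
```

On real data the difference is rarely this clear, so it helps to look at the spread of fold scores as well as the mean before keeping or dropping a feature.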

5. Document Every Transformation

Transparency is a hallmark of professional feature engineering. Keep track of every stage, including which encoding or scaling techniques were used and how missing values were imputed.

This makes your procedure reproducible and helps teams debug or improve models later.

This tracking can be partly automated: scikit-learn pipelines keep the sequence of transformations in a single object, and tools like MLflow can log parameters and artifacts for each run, which makes it much harder for a transformation to go unrecorded.
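As a sketch of how a scikit-learn pipeline can double as documentation, the example below declares imputation, scaling, and encoding in one object. The column names and the choice of steps are hypothetical.

```python
import numpy as np
import pandas as pd
from sklearn.compose import ColumnTransformer
from sklearn.impute import SimpleImputer
from sklearn.pipeline import Pipeline
from sklearn.preprocessing import OneHotEncoder, StandardScaler

# Hypothetical raw table with missing values and a categorical column.
df = pd.DataFrame({
    "age": [25.0, np.nan, 47.0, 33.0],
    "income": [30_000.0, 52_000.0, np.nan, 41_000.0],
    "city": ["A", "B", "A", "C"],
})

# Numeric columns: median imputation, then standardization.
numeric = Pipeline([
    ("impute", SimpleImputer(strategy="median")),
    ("scale", StandardScaler()),
])

# Categorical columns: one-hot encoding that tolerates unseen categories.
categorical = Pipeline([
    ("encode", OneHotEncoder(handle_unknown="ignore")),
])

preprocess = ColumnTransformer([
    ("num", numeric, ["age", "income"]),
    ("cat", categorical, ["city"]),
])

X_ready = preprocess.fit_transform(df)

# Printing the object lists every step and its parameters.
print(preprocess)
print(X_ready.shape)
```

Because every step and parameter lives in the `preprocess` object, the object itself can be saved, versioned, or logged alongside the model.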

Common Mistakes to Avoid

When feature engineering, even seasoned data scientists might make small mistakes. Just as crucial as learning the proper techniques is knowing what not to do.

Here are some of the most frequent errors that might jeopardize the performance and dependability of your model, along with tips on how to prevent them.

1. Using Features That Leak Target Data

Data leakage happens when information from future data or from the target variable inadvertently finds its way into your training features. As a result, the model appears very accurate during training and validation but performs poorly in practical applications.

When predicting loan approval, for example, including a “loan approval status” column is an obvious leak. A subtler case is a feature such as an approval date, which is only recorded after the event you are predicting; it leaks as well.

Always separate training and testing data properly, and make sure that all engineered features are based solely on the data available at the time of prediction.
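A simple guard for time-based data is to build features only from events that happened before the moment of prediction. In this sketch the event table and the `prediction_date` cutoff are hypothetical.

```python
import pandas as pd

# Hypothetical event log.
events = pd.DataFrame({
    "customer_id": [1, 1, 1, 2, 2],
    "event_date": pd.to_datetime(
        ["2024-01-05", "2024-02-10", "2024-03-20", "2024-01-15", "2024-03-01"]
    ),
    "amount": [100.0, 200.0, 900.0, 50.0, 70.0],
})

# Hypothetical moment at which the prediction is made.
prediction_date = pd.Timestamp("2024-03-01")

# Keep only events strictly before the prediction date, so that
# nothing from the "future" can enter the features.
history = events[events["event_date"] < prediction_date]

features = history.groupby("customer_id")["amount"].agg(
    total_spend="sum", n_events="count"
)

print(features)
```

The large purchase on 2024-03-20 is excluded, because it would not have been known when the prediction was made.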

2. Over-Engineering Features

In an attempt to improve performance, it can be tempting to add dozens or even hundreds of additional features, but more isn’t necessarily better.

In addition to increasing computing costs and confusing the model, adding duplicate or unnecessary features can cause overfitting, a situation in which the model learns patterns from the training set but is unable to generalize.

Restraint is the answer: concentrate on meaningful transformations supported by correlation or domain logic, and use feature selection strategies to eliminate superfluous complexity.

3. Ignoring Scaling or Normalization

The behavior of algorithms that rely on distance or gradient-based optimization (such as logistic regression, SVMs, or K-Means) can be unpredictable when features exist on widely disparate scales, such as age in years and income in lakhs.

If scaling or normalization is not used, results may be skewed as large-valued features take precedence over smaller ones. For these algorithms, normalize or standardize numerical data before training, and apply the same transformation, with parameters learned from the training set, to the test set.

4. Failing to Cross-Validate Transformations

Feature transformations should be part of the cross-validation loop, although many novices overlook this. For instance, data leakage may occur if the full dataset is scaled or encoded before being split.

This is because information from the test fold may affect the training fold. Use pipelines to make sure that just the training data within each fold is used to learn all transformations (scaling, encoding, and imputation).
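A minimal sketch of this pattern with scikit-learn: wrapping the scaler and the model in one pipeline means `cross_val_score` refits the scaler on the training portion of every fold. The synthetic dataset and the choice of logistic regression are assumptions for the example.

```python
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import cross_val_score
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler

# Synthetic classification data (assumption).
X, y = make_classification(n_samples=300, n_features=10, random_state=0)

# The scaler is part of the model, so it is fit inside each fold
# using only that fold's training rows.
model = make_pipeline(StandardScaler(), LogisticRegression(max_iter=1000))

scores = cross_val_score(model, X, y, cv=5)

print("Fold accuracies:", scores)
print("Mean accuracy:", scores.mean())
```

The same approach extends to encoders and imputers: anything that learns parameters from data belongs inside the pipeline rather than in a separate step run on the full dataset.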

Conclusion

Feature engineering connects raw data with actionable insights. As we have seen, well-constructed features can significantly improve model performance, interpretability, and generalization to previously unseen data.

From adding new variables to modifying, scaling, and picking the appropriate features, each step provides clarity and predictive power that algorithms alone cannot provide.

Feature engineering is fundamentally a science and an art. It uses statistical tools, subject knowledge, and creative thinking to discover hidden patterns in data.

There is no one-size-fits-all method; the most effective features are often discovered through testing, iteration, and careful analysis.

The key to success for aspiring machine learning and data science professionals is to practice regularly. Examine various transformations, investigate datasets, and assess the impact of each designed feature on model accuracy.

Over time, this practical experience develops your intuition and hones your ability to create features that actually matter.

In your next machine learning project, start small, stay curious, and apply these feature engineering principles. The quality of your features frequently determines the accuracy of your predictions.

FAQs: Feature Engineering in Machine Learning

What is the main goal of feature engineering in machine learning?

The main goal is to convert raw data into useful features that improve a model’s capacity to identify trends and generate precise predictions. Effective feature engineering enhances performance and interpretability, reduces noise, and highlights significant trends.

How does feature engineering improve model accuracy?

By eliminating unnecessary noise, feature engineering assists models in concentrating on essential patterns. Models can lessen overfitting, increase predictive accuracy, and improve generalization by generating meaningful variables, converting skewed data, and choosing the most useful features.

What are the best feature engineering techniques for beginners?

Beginners should start with essential techniques such as handling missing values, encoding categorical variables, scaling numerical data, and creating new features with domain knowledge. Most data preprocessing work is built around these methods, which also make data ready for modeling.
